This output can be useful in applications such as medical diagnosis, where the confidence of the model in predicting the correct diagnosis is crucial. Provides Probabilistic OutputsĬross-entropy provides a probabilistic output, which can be interpreted as the confidence of the model in predicting each class. Cross-entropy handles class imbalance well by assigning higher weights to the underrepresented classes, making the model more accurate. In classification tasks, it is common to have class imbalance, where some classes have more samples than others. Here are some of the benefits of using cross-entropy: 1. Benefits of Using Cross-EntropyĬross-entropy has several benefits over other loss functions, such as mean squared error (MSE), which is commonly used in regression tasks. Finally, we train the model using a dataloader that provides inputs and true labels. We then define the cross-entropy loss function and the optimizer. In the above example, we define a model that predicts the probability distribution over 5 classes. zero_grad () outputs = model ( inputs ) loss = criterion ( outputs, labels ) loss. parameters (), lr = 0.01 ) # Train the model for inputs, labels in dataloader : optimizer. CrossEntropyLoss () # Define the optimizer optimizer = optim. Softmax ( dim = 1 ) ) # Define the loss function criterion = nn. Import torch.nn as nn import torch.optim as optim # Define a model that predicts the probability distribution over the classes model = nn.

PYTORCH CROSS ENTROPY LOSS HOW TO

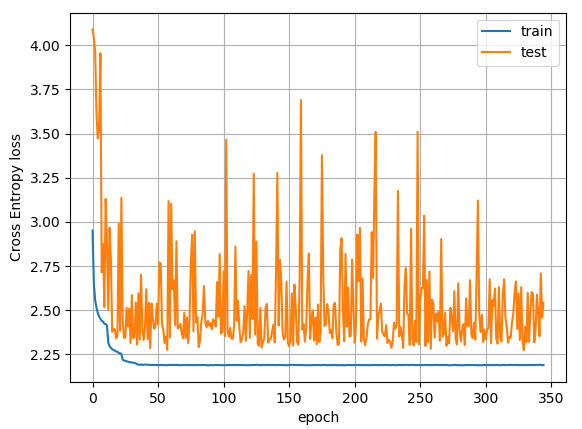

Here is an example of how to use cross-entropy in PyTorch: We then define a loss function that takes the predicted distribution and the true labels as inputs and calculates the cross-entropy loss. To use cross-entropy in PyTorch, we first need to define a model that predicts the probability distribution over the classes. PyTorch provides several built-in loss functions, including cross-entropy. PyTorch is a popular open-source machine learning framework that provides a variety of tools for building and training machine learning models. When the predicted probability distribution is closer to the actual distribution, the cross-entropy loss is lower, and the model is considered to be more accurate.

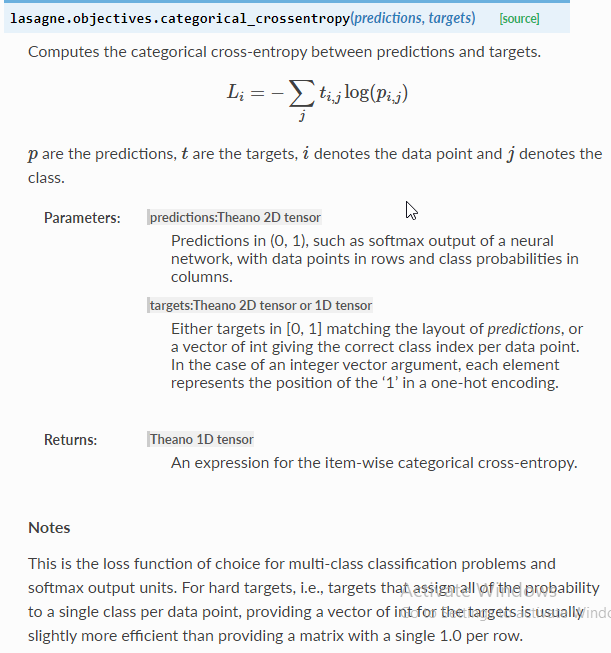

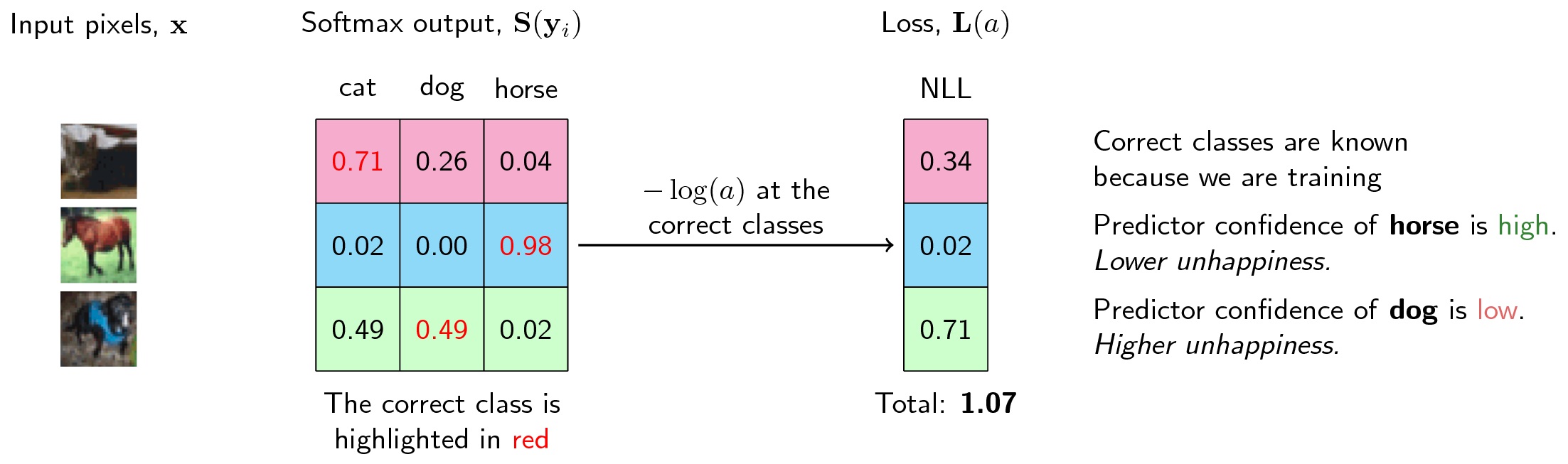

In simple terms, cross-entropy measures how well the predicted probability distribution matches the actual distribution. In the context of machine learning, it is commonly used in classification tasks to measure the difference between the predicted probability distribution and the true probability distribution of the labels. What is Cross-Entropy?Ĭross-entropy is a loss function that measures the difference between two probability distributions. In this blog post, we will discuss the concept of cross-entropy, its implementation in PyTorch, and its benefits in improving the accuracy of machine learning models.

Cross-entropy is one such loss function, which is widely used in classification tasks.

PYTORCH CROSS ENTROPY LOSS SOFTWARE

| Miscellaneous Understanding Cross Entropy in PyTorchĪs a data scientist or software engineer, you are expected to work with machine learning models and deal with various loss functions.
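Class weighting is one way to make cross-entropy less sensitive to class imbalance. The sketch below assumes a hypothetical 3-class problem with made-up label counts and inverse-frequency weights (one common heuristic, chosen here only for illustration). It passes the weights through the `weight` argument of `nn.CrossEntropyLoss` and uses `reduction="none"` so the per-sample effect is visible.

```python
import torch
import torch.nn as nn

# Hypothetical label counts for a 3-class problem (class 2 is rare).
class_counts = torch.tensor([900.0, 90.0, 10.0])

# Inverse-frequency weights: an illustrative heuristic, not the only option.
class_weights = class_counts.sum() / (len(class_counts) * class_counts)

# reduction="none" returns one loss value per sample instead of an average.
plain_criterion = nn.CrossEntropyLoss(reduction="none")
weighted_criterion = nn.CrossEntropyLoss(weight=class_weights, reduction="none")

# Two samples with identical made-up logits but different true classes.
logits = torch.tensor([[2.0, 0.5, 0.1],
                       [2.0, 0.5, 0.1]])
labels = torch.tensor([0, 2])  # common class, then rare class

plain_losses = plain_criterion(logits, labels)
weighted_losses = weighted_criterion(logits, labels)

# Each weighted loss is the plain loss scaled by the weight of its true class.
print(plain_losses)
print(weighted_losses)
```

With the default `reduction="mean"`, PyTorch divides by the sum of the weights of the targets in the batch, so the weights change how much each sample contributes to the average rather than simply rescaling the final value.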
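To make the definition of cross-entropy concrete, here is a minimal sketch that computes the loss by hand and compares it with PyTorch's built-in function. The logits and labels are made-up values; the only assumption is the standard formulation in which the loss is the mean negative log-probability assigned to the true class.

```python
import torch
import torch.nn.functional as F

# Raw scores (logits) for 2 samples over 3 classes; values are made up.
logits = torch.tensor([[2.0, 1.0, 0.1],
                       [0.2, 0.3, 3.0]])
labels = torch.tensor([0, 2])  # true class index for each sample

# Softmax turns each row of logits into a probability distribution.
probs = F.softmax(logits, dim=1)

# Cross-entropy by hand: mean of -log(probability of the true class).
true_class_probs = probs[torch.arange(len(labels)), labels]
manual_loss = -torch.log(true_class_probs).mean()

# Built-in version, which takes the logits directly.
builtin_loss = F.cross_entropy(logits, labels)

print(probs)
print(manual_loss.item(), builtin_loss.item())
```

The two printed loss values should agree up to floating-point error, and each row of `probs` sums to 1, which is what allows the softmax output to be read as per-class confidence.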
